The EU AI Act & Education: What Schools and Universities Must Know (2025 Guide)

Why the EU AI Act Matters to Education Institutions

The European Union’s Artificial Intelligence Act (EU AI Act) is the world’s first comprehensive law designed to regulate the development and use of artificial intelligence. Adopted in 2024, it establishes a framework to ensure AI systems used across the EU are safe, transparent, and respect fundamental rights. Much like the GDPR reshaped data protection worldwide, the AI Act is expected to set a global standard for trustworthy AI.

The Act introduces a risk-based approach, classifying AI systems into four categories:

Unacceptable risk: completely banned, e.g. social scoring by governments.

High risk: allowed but subject to strict obligations, e.g. certain AI uses in education, healthcare, or employment.

Limited risk: transparency obligations, e.g. chatbots that must disclose they are AI.

Minimal risk: no restrictions, e.g. spam filters or video game AI.

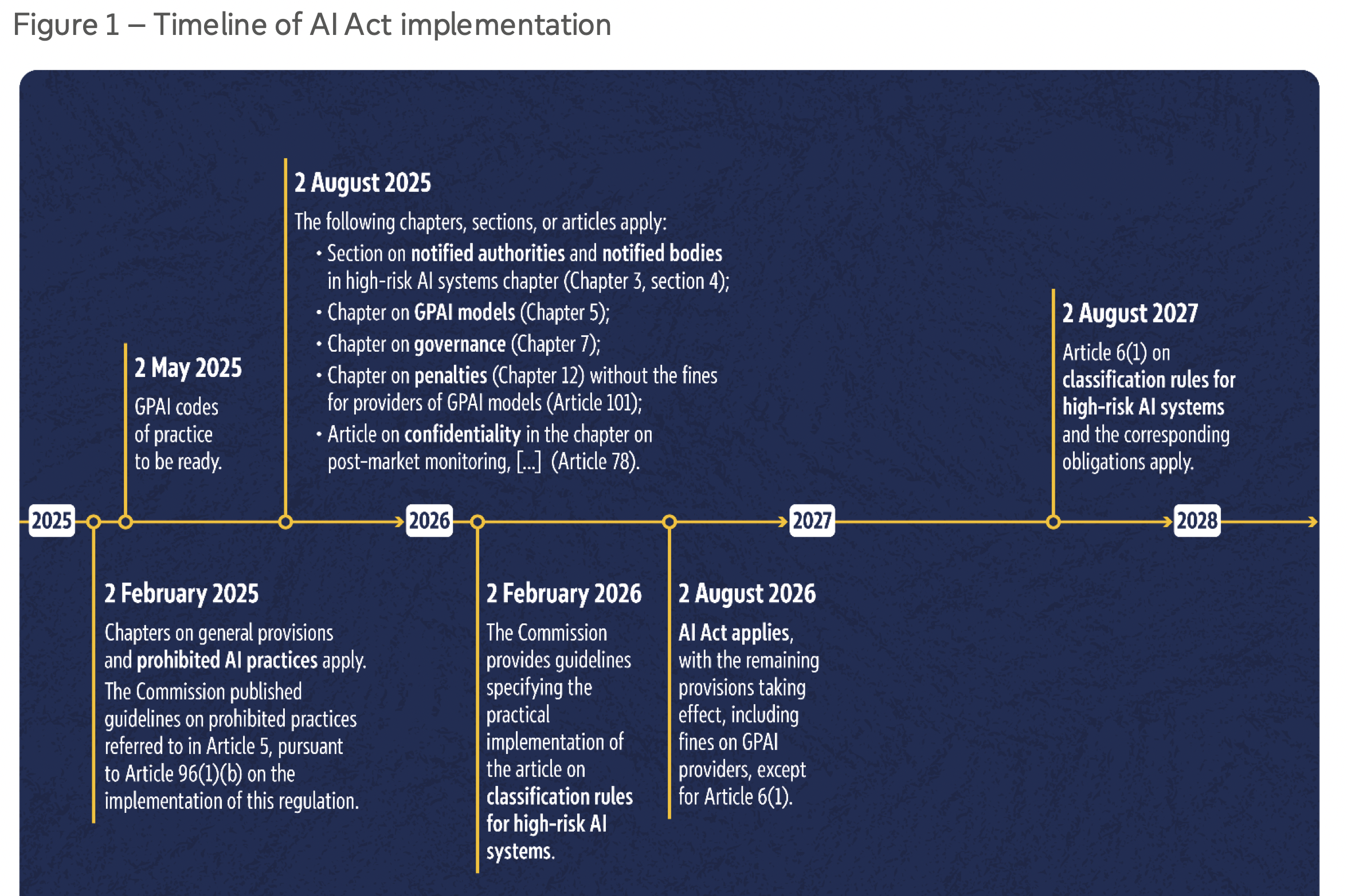

The first obligations begin in February 2025, and by August 2026, full compliance will be required across high-risk sectors, including education.

For schools and universities, this means that tools used for admissions, grading, monitoring, or tutoring may now fall under the high-risk category, bringing new compliance requirements. Institutions that act early will gain trust, reduce risks, and position themselves as responsible adopters of AI.

Timeline for implementing the EU AI Act

Image source: AI Act implementation timeline, European Parliament.

What the AI Act Regulates & What “High-Risk” Means for Education

The AI Act classifies AI systems by risk: unacceptable, high, limited, and minimal. It also includes rules for general-purpose AI (GPAI) models.

Education and vocational training use cases are listed in Annex III and are therefore considered high-risk. These include AI systems that determine access or admission, evaluate learning outcomes, assign students to institutions or programmes, or monitor student behavior during exams.

For high-risk systems, providers must follow stricter rules covering risk management, documentation, traceability, transparency, human oversight, accuracy, and cybersecurity.

Some AI practices are outright banned under Article 5, such as systems using subliminal manipulation, emotion recognition in workplaces and educational institutions (except for medical or safety reasons), or exploiting vulnerabilities (e.g., age, disability) to distort behavior.

Even limited-risk systems such as chatbots carry transparency obligations: users must be told when they are interacting with AI.
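To make the tiers concrete, here is a minimal Python sketch of how an institution might tag each tool in its inventory with a provisional risk tier. The purpose tags, the `AISystem` record, and the `classify` function are hypothetical names invented for illustration. The groupings paraphrase this article rather than the legal text, so the result is a starting point for legal review, not a determination.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical purpose tags (assumption): they paraphrase the categories
# described in this article, not the wording of the Act itself.
PROHIBITED_PURPOSES = {
    "emotion_recognition",
    "subliminal_manipulation",
    "vulnerability_exploitation",
}
ANNEX_III_EDUCATION_PURPOSES = {
    "admission",
    "learning_evaluation",
    "student_assignment",
    "exam_monitoring",
}
TRANSPARENCY_PURPOSES = {"chatbot"}


@dataclass
class AISystem:
    name: str
    purposes: set


def classify(system: AISystem) -> RiskTier:
    """Return the strictest tier triggered by any of the system's purposes."""
    if system.purposes & PROHIBITED_PURPOSES:
        return RiskTier.UNACCEPTABLE
    if system.purposes & ANNEX_III_EDUCATION_PURPOSES:
        return RiskTier.HIGH
    if system.purposes & TRANSPARENCY_PURPOSES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


# Made-up inventory entries, for illustration only.
inventory = [
    AISystem("Admissions ranker", {"admission"}),
    AISystem("Helpdesk bot", {"chatbot"}),
    AISystem("Proctoring with mood detection", {"exam_monitoring", "emotion_recognition"}),
    AISystem("Spam filter", {"spam_filtering"}),
]

for system in inventory:
    print(f"{system.name}: {classify(system).value}")
```

A tool that combines several purposes gets the strictest tier, which is why the proctoring example lands in the unacceptable tier even though it is also an exam-monitoring tool.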

Find out more in the official summary of the AI Act:

https://artificialintelligenceact.eu/high-level-summary/

Key Compliance Obligations Starting in 2025

- From 2 February 2025, institutions using or deploying AI systems must ensure AI literacy among staff and relevant personnel (per Article 4).

- The law requires that training be proportionate to staff members' technical knowledge and to the context in which the AI is used.

- As of August 2025, obligations for general-purpose AI models begin, and by August 2026, high-risk system rules will fully apply.

- Institutions may be penalized for non-compliance (e.g., fines, liability), especially when AI misuse causes harm.
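For planning purposes, the dates above can be kept in a small lookup that a compliance team checks against any given day. The following Python sketch is one way to do that. The function names are hypothetical, and the exact day of the month for the two August milestones (2 August) is an assumption taken from the Act's published timeline rather than from the text above.

```python
from datetime import date
from typing import List, Optional, Tuple

# Milestones named in this guide. The "2 August" day for the later two
# entries is an assumption based on the Act's published timeline.
MILESTONES = [
    (date(2025, 2, 2), "AI literacy duty (Article 4)"),
    (date(2025, 8, 2), "General-purpose AI model obligations"),
    (date(2026, 8, 2), "High-risk system rules"),
]


def obligations_in_force(on: date) -> List[str]:
    """List every milestone whose start date is on or before `on`."""
    return [label for start, label in MILESTONES if on >= start]


def next_milestone(on: date) -> Optional[Tuple[int, str]]:
    """Return (days remaining, label) for the next milestone, or None if all have passed."""
    upcoming = [(start, label) for start, label in MILESTONES if start > on]
    if not upcoming:
        return None
    start, label = min(upcoming)
    return (start - on).days, label


today = date(2025, 9, 1)  # example date, chosen arbitrarily
print(obligations_in_force(today))
print(next_milestone(today))
```

Keeping the dates in one list means a change in the official timeline only has to be corrected in a single place.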

What Educational Institutions Should Do Now: A Compliance Checklist

Map your AI usage: Identify which AI systems your institution uses or plans to use (admissions, grading, chatbots, proctoring, recommendation engines).

Classify risk: Determine if a system falls under high-risk (Annex III) vs limited risk. Document and justify your classification.

Ensure AI literacy training: Train all relevant staff. Record who took the training, training materials, and assessment of comprehension.

Ensure student and parent transparency: Clearly communicate when AI is used in admissions, grading, proctoring, or student support systems. Transparency builds trust and fulfills the Act's requirement to inform users when they are interacting with AI.

Safeguard against bias and discrimination: Regularly assess AI systems for bias in admissions or grading outcomes. Document measures to ensure fairness, especially for vulnerable groups (students with disabilities, language minorities, or disadvantaged backgrounds).

Review prohibited practices: Ensure no system you use engages in banned behaviors (emotion recognition for evaluation, manipulative personalization, covert manipulation).

Demand compliance from vendors: When purchasing or licensing AI tools, require contractual guarantees that they follow AI Act obligations.

Build documentation & traceability: Maintain audit trails, records of system changes, logs of decisions, risk assessments, and conformity reports.

Plan for human oversight and transparency: Ensure workflows allow humans to override AI decisions; inform users when AI is in use; ensure fairness and non-discrimination.

Monitor & adapt: Once rules take full effect, perform periodic reviews, incident reporting, and ongoing evaluation of your AI systems.
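The bias check in the list above can start very simply: compare outcome rates across student groups and flag large gaps for human review. The Python sketch below shows one way to do that. The function names and sample data are invented for illustration, and the 0.8 threshold is an assumption borrowed from the "four-fifths" rule of thumb used in US employment-selection guidance. The AI Act does not prescribe this metric or threshold.

```python
from collections import defaultdict


def acceptance_rates(decisions):
    """decisions: iterable of (group, accepted) pairs -> {group: acceptance rate}."""
    totals = defaultdict(int)
    accepted = defaultdict(int)
    for group, was_accepted in decisions:
        totals[group] += 1
        accepted[group] += int(was_accepted)
    return {group: accepted[group] / totals[group] for group in totals}


def flag_disparities(rates, min_ratio=0.8):
    """Return groups whose rate is below min_ratio times the highest group's rate.

    min_ratio=0.8 is an illustrative assumption, not a legal threshold.
    """
    best = max(rates.values())
    if best == 0:
        return []  # nobody was accepted, so there is no gap to compare
    return sorted(group for group, rate in rates.items() if rate / best < min_ratio)


# Invented sample: group A accepted 8 of 10 times, group B 5 of 10 times.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = acceptance_rates(decisions)
print(rates)
print(flag_disparities(rates))
```

A flagged group is a prompt for investigation, not proof of discrimination. Record the numbers, the follow-up review, and any corrective action so the audit trail shows how the gap was handled.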

Risks of Inaction

- Legal liability if AI systems cause harm or bias

- Loss of trust from students, parents, regulators

- Penalties and fines under GDPR-style enforcement, reaching up to EUR 35 million or 7% of worldwide annual turnover for prohibited practices

- Future retrofitting becomes more expensive

Conclusion

The EU AI Act is not a distant regulation. It is a major shift that will shape how schools and universities use AI in admissions, teaching, assessment, and student support. Institutions that start preparing now will not only avoid penalties but also gain a competitive advantage. By ensuring transparency with students and families, safeguarding against bias, and building AI literacy among staff, education providers can show they are serious about responsible and ethical innovation.

This is also a chance to build trust. Parents want to know their children are treated fairly, students want reassurance that technology won’t undermine their opportunities, and regulators want proof that institutions can manage AI responsibly. Those who act early will stand out as leaders in the European education landscape.

At StudyfinderAI, we design our platform with compliance and transparency at its core, giving schools and universities tools that are future-proof, safe, and aligned with EU requirements. Together, we can ensure AI in education is used to empower - not endanger - students’ futures.

PS: You can follow the latest news about the EU AI Act via: https://artificialintelligenceact.eu